Answers Interrater Reliability Teaching Strategies Gold Cheat Sheet

Have you ever had that sinking feeling when different people look at the same thing and see something completely different? Perhaps one teacher grades a paper harshly while another is very lenient, or two researchers interpret the same data in wildly opposing ways. That sort of inconsistency undermines fairness and the quality of the information we gather. It shows up everywhere from school classrooms to research studies, so it's close to a universal problem.

Making sure everyone sees things the same way, or at least very similarly, is what we call interrater reliability. It sounds a bit technical, but it's basically about getting consistent "answers" from different evaluators. When we can't count on that consistency, the value of our assessments or our data collection efforts goes down quite a bit. It means the insights we think we're getting might not be as solid as we hope, which is a bit concerning, isn't it?

This piece gives you practical teaching strategies for getting everyone on the same page for assessments. Think of it as a "gold cheat sheet," packed with ideas for improving how consistent people are when they evaluate things. Good, dependable "answers" matter, and this guide aims to make them a lot easier to get.

Table of Contents

- What is Interrater Reliability, Anyway?

- Why Consistent Answers Matter So Much

- Common Stumbles on the Path to Agreement

- Your Gold Cheat Sheet for Teaching Strategies

- Making Your Cheat Sheet Truly Gold

- Frequently Asked Questions

- Getting to Better Answers, Together

What is Interrater Reliability, Anyway?

Interrater reliability, sometimes called inter-observer agreement, just means how much two or more people agree on what they see or what they're evaluating. Think about it this way: if you have a team of judges scoring a gymnastics routine, you want them all to give similar scores for the same performance. If their scores are all over the place, well, that's a problem, isn't it? It suggests their "answers" aren't reliable.

This concept matters across many different fields. In education, it helps make sure that grades are fair, no matter who is doing the grading. In research, it helps ensure that data collected by different people is consistent and trustworthy. It's about getting information you can count on.

When we talk about getting "answers," we're not just talking about simple questions. We're talking about consistent judgments, observations, or scores. It's about reducing the chance that someone's personal opinion, or their mood on a given day, will skew the results. That's what we're aiming for: a fair shot for everyone and dependable data.

Why Consistent Answers Matter So Much

Why should we even bother with all this effort to get consistent "answers"? For one thing, it builds trust. If students know their work will be judged fairly, no matter which teacher grades it, they trust the system more. Similarly, in research, if data collectors are consistent, the findings become much more believable.

Think about sports, where you have detailed statistics on players, teams, and results. You expect those numbers to be gathered and recorded consistently, no matter who is keeping the records. If one person counts a "foul" differently than another, those statistics become less useful. Consistent "answers" give us a solid foundation for making good decisions.

Without good interrater reliability, our "answers" can be misleading. Imagine a bone scan where increased uptake can mean many things, like infection or cancer. If different doctors interpret the same scan differently, it could lead to wrong diagnoses. That's a very serious consequence, isn't it? Getting it right, consistently, is really important for good outcomes.

Common Stumbles on the Path to Agreement

Getting people to agree consistently isn't always easy. One common problem is unclear guidelines. If the rules for evaluation are fuzzy, everyone will just make up their own rules, and then you have a mess. Another issue is a lack of practice. People need chances to try out the evaluation process and get feedback before it really counts.

Sometimes people also have different ideas about what "good" or "bad" looks like, even with guidelines. This is where personal bias can creep in. Maybe someone has a favorite type of answer, or they're a bit harder on certain kinds of mistakes. These little differences add up and can throw off the consistency of "answers."

Then there's the sheer complexity of what's being evaluated. Some things are just hard to judge, like creativity or the nuance of a conversation. The more complex the thing being judged, the harder it is to get everyone to see eye to eye.

Your Gold Cheat Sheet for Teaching Strategies

So, how do we get everyone on the same page? How do we teach people to give consistent "answers"? This "gold cheat sheet" gives you some practical strategies. They're about more than just telling people what to do; they're about helping them understand the criteria and apply them consistently.

Strategy 1: Crystal-Clear Guidelines

This is probably the most important step. You need evaluation guidelines that leave no room for guesswork. Think of them as the "help words" in a game, giving clear clues to assist players. These aren't just suggestions; they are precise instructions for how to judge something.

Use rubrics with very specific examples for each level of performance. Instead of saying "good," describe what "good" looks like in detail. For instance, if you're evaluating a written piece, specify what makes a "strong thesis statement" versus a "developing thesis statement."

When creating these guidelines, it's often helpful to involve the people who will be using them. Their input can make the guidelines more practical and easier to follow. It also gives them a sense of ownership, which helps with buy-in.

Strategy 2: Practice Makes Perfect (Well, Almost)

Just as you wouldn't expect someone to be a great athlete without practice, you can't expect consistent evaluators without practice. Interactive study modes and flashcards can help here, in the same way they help when you're learning the terms and definitions of any new subject.

Give people sample items to evaluate. Then have them compare their "answers" to a "gold standard" set of evaluations, or to each other's. This hands-on experience helps them see where they're off and where they're hitting the mark.

You could even create little quizzes or scenarios where they have to apply the guidelines. The more chances people have to practice and get immediate feedback, the better they'll become at giving consistent "answers." It's about building muscle memory for evaluation.
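If you want a quick number to hand back after a practice round, something like the following could work. It's only a minimal sketch: the function name, the rater names, and the sample scores are all made up for illustration, and it assumes every rater scored the same items in the same order as the "gold standard" list.

```python
def agreement_with_gold(rater_scores, gold_scores):
    """Fraction of items on which a rater matches the gold-standard score."""
    if len(rater_scores) != len(gold_scores):
        raise ValueError("score lists must have the same length")
    if not gold_scores:
        raise ValueError("need at least one scored item")
    matches = sum(r == g for r, g in zip(rater_scores, gold_scores))
    return matches / len(gold_scores)


# Hypothetical practice round: five sample items scored on a 1-4 rubric.
gold = [3, 2, 4, 1, 3]
practice = {
    "rater_a": [3, 2, 4, 2, 3],
    "rater_b": [2, 2, 3, 1, 3],
}

# One exact-match agreement rate per rater, ready to share as feedback.
report = {name: agreement_with_gold(scores, gold) for name, scores in practice.items()}
for name, rate in report.items():
    print(f"{name}: {rate:.0%} exact agreement with the gold standard")
```

Exact-match agreement is the simplest possible measure. It doesn't correct for agreement that would happen by chance, so treat it as a training aid and not as a formal reliability statistic.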

Strategy 3: Talk It Out: Calibration Sessions

This is where the magic really happens. Get all the evaluators together to discuss their "answers" on the practice items. This isn't about pointing fingers; it's about understanding *why* different "answers" happened. It's a bit like a team huddle, where everyone shares their perspective.

During these sessions, you can discuss the tricky cases, the ones that are hard to categorize. Why did one person score it high and another low? What specific parts of the guidelines did they focus on? This conversation helps everyone refine their understanding of the criteria and how to apply them.

These discussions also help identify ambiguities in your guidelines. If several people are confused by the same part, you know that section needs clarifying. It's a great way to fine-tune your "cheat sheet" and make it even better.
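To decide which practice items deserve time in a calibration session, one option is to flag the items where scores were furthest apart. The sketch below assumes numeric rubric scores and uses a made-up threshold (`min_spread`); the function name and the sample data are hypothetical.

```python
def flag_disputed_items(scores_by_rater, min_spread=2):
    """Return (item_index, spread) pairs where the gap between the highest
    and lowest score is at least min_spread, largest gap first."""
    score_lists = list(scores_by_rater.values())
    if not score_lists:
        raise ValueError("need at least one rater")
    n_items = len(score_lists[0])
    if any(len(scores) != n_items for scores in score_lists):
        raise ValueError("every rater must score the same number of items")

    flagged = []
    for i in range(n_items):
        item_scores = [scores[i] for scores in score_lists]
        spread = max(item_scores) - min(item_scores)
        if spread >= min_spread:
            flagged.append((i, spread))
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)


# Hypothetical scores from three raters on four practice items.
scores = {
    "rater_a": [3, 2, 4, 1],
    "rater_b": [3, 4, 4, 1],
    "rater_c": [2, 1, 4, 3],
}
disputed = flag_disputed_items(scores)
print(disputed)
```

Items that come back flagged are good candidates for the "why did we score this differently?" conversation, and a cluster of them around one rubric row may point to wording that needs clarifying.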

Strategy 4: Feedback Loops for Growth

Learning doesn't stop after the initial training. People need ongoing feedback on their "answers." This can be done by having a lead evaluator periodically review a sample of their work and provide specific, constructive comments. It's about helping them grow.

Just as an acronym like REACT gives people a structured response to follow in certain situations, a structured feedback process can help evaluators improve. Point out specific instances where their "answers" differed from the group consensus or the "gold standard," and offer suggestions for how they can adjust their approach.

This feedback shouldn't just be one-way. Encourage evaluators to ask questions and share their own challenges. A supportive environment where everyone feels comfortable discussing their "answers" openly will lead to better overall consistency, which is really what we want, isn't it?

Strategy 5: Tech Tools to Lend a Hand

Technology can be a real helper in achieving better interrater reliability. There are software programs that can help you create and manage rubrics, track evaluator "answers," and even calculate agreement statistics. These tools can save a lot of time and make the process much more efficient.

Online platforms can also make it easier to conduct training and calibration sessions remotely, which is great if your evaluators are spread out geographically. You can share materials, hold live discussions, and even have people practice evaluating samples together in real time.

Some tools allow for anonymous rating, which can reduce bias. Tracking has a benefit of its own, too: when people know their "answers" are being recorded and compared, they tend to be more careful and thoughtful in their evaluations.

Making Your Cheat Sheet Truly Gold

To make your "answers interrater reliability teaching strategies gold cheat sheet" truly valuable, remember that it's a living document. It's not something you create once and then forget about. You should review and update your guidelines regularly, especially after calibration sessions reveal areas of confusion.

Think about sports, where rules and interpretations evolve over time. What was considered a foul ten years ago might be called differently today. Similarly, your evaluation criteria might need to adapt as the nature of the work or the context changes, so keep them current.

Also, foster a culture where asking questions about criteria is encouraged. When people feel safe to say, "I'm not sure how to score this," it's a chance to clarify and strengthen everyone's understanding. This open communication is a key ingredient for consistent "answers."

Frequently Asked Questions

People often have questions about getting consistent evaluations. Here are a few common ones:

What is the best way to measure interrater reliability?

There are a few ways. For categorical data (like "pass" or "fail"), you might use Cohen's Kappa when you have two evaluators, or Fleiss' Kappa when you have more than two. For continuous data (like scores on a scale), you could use the Intraclass Correlation Coefficient (ICC). The best method depends on the type of "answers" you're collecting and how many evaluators you have.
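To make Cohen's Kappa a little less abstract, here is a small sketch of the standard two-rater calculation: observed agreement, minus the agreement you'd expect by chance, divided by one minus the chance agreement. The function name and the sample ratings are invented for illustration, and the handling of the undefined case is a choice made for this sketch. For real work, an established statistics library is the safer route.

```python
from collections import Counter


def cohens_kappa(rater_1, rater_2):
    """Cohen's kappa for two raters assigning categories to the same items."""
    if len(rater_1) != len(rater_2) or not rater_1:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_1)

    # Observed agreement: share of items where the two raters match.
    observed = sum(a == b for a, b in zip(rater_1, rater_2)) / n

    # Chance agreement: for each category, multiply the two raters'
    # proportions of using that category, then sum over categories.
    counts_1 = Counter(rater_1)
    counts_2 = Counter(rater_2)
    expected = sum(counts_1[c] * counts_2[c] for c in counts_1) / (n * n)

    if expected == 1.0:
        # Both raters used one identical category for everything, so kappa
        # is undefined (0/0). Returning 1.0 is a convention for this sketch.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical pass/fail ratings from two evaluators on ten items.
r1 = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "pass", "fail", "pass"]
r2 = ["pass", "fail", "fail", "pass", "fail", "pass", "pass", "pass", "fail", "pass"]
kappa = cohens_kappa(r1, r2)
print(f"kappa = {kappa:.2f}")
```

In this made-up example the two evaluators agree on 8 of 10 items (0.80), but chance alone would produce about 0.52 agreement, so kappa comes out near 0.58. That gap is why raw percent agreement can look rosier than kappa.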

How much agreement is "good enough"?

It depends on what you're evaluating. For high-stakes decisions, like medical diagnoses or standardized tests, you want very high agreement, perhaps a Kappa of 0.80 or higher. For less critical things, a lower level might be acceptable. It's about balancing practicality against the importance of the "answers."

Can interrater reliability be improved over time?

Absolutely, it can! With consistent training, clear guidelines, regular calibration sessions, and ongoing feedback, people's ability to provide consistent "answers" tends to get better. It's not a one-time fix; it's an ongoing process of learning and refinement.

Getting to Better Answers, Together

Getting consistent "answers" from different evaluators is a challenge, but it's one we can definitely overcome. By using clear guidelines, providing plenty of practice, talking things through, giving good feedback, and using helpful tools, you can improve how reliable your evaluations are.

Think of this "answers interrater reliability teaching strategies gold cheat sheet" as your guide to building a system where fairness and accuracy are paramount. It's about empowering everyone involved to give reliable "answers," which helps us all make better decisions and gain more trustworthy insights. For more ideas on how to make your evaluations shine, you can learn more about consistent evaluation practices on our site, and check out some tips on effective grading practices.

This effort to get consistent "answers" is about building confidence in your data and your assessments. When everyone is on the same page, the information you gather becomes much more powerful and useful. It's a continuous journey, but with these strategies, you're well on your way to achieving that "gold standard" of agreement.

For further reading on measurement consistency in various fields, you might find resources from the American Educational Research Association helpful. They often discuss methods for improving data quality and rater agreement in educational settings.

- Super Mrkt Los Angeles

- Black Wolf Harley Davidson Bristol Va

- The Battersea Barge

- Katy Spratte Joyce

- Club Level 4

Answers Interrater Reliability Teaching Strategies Gold Chea

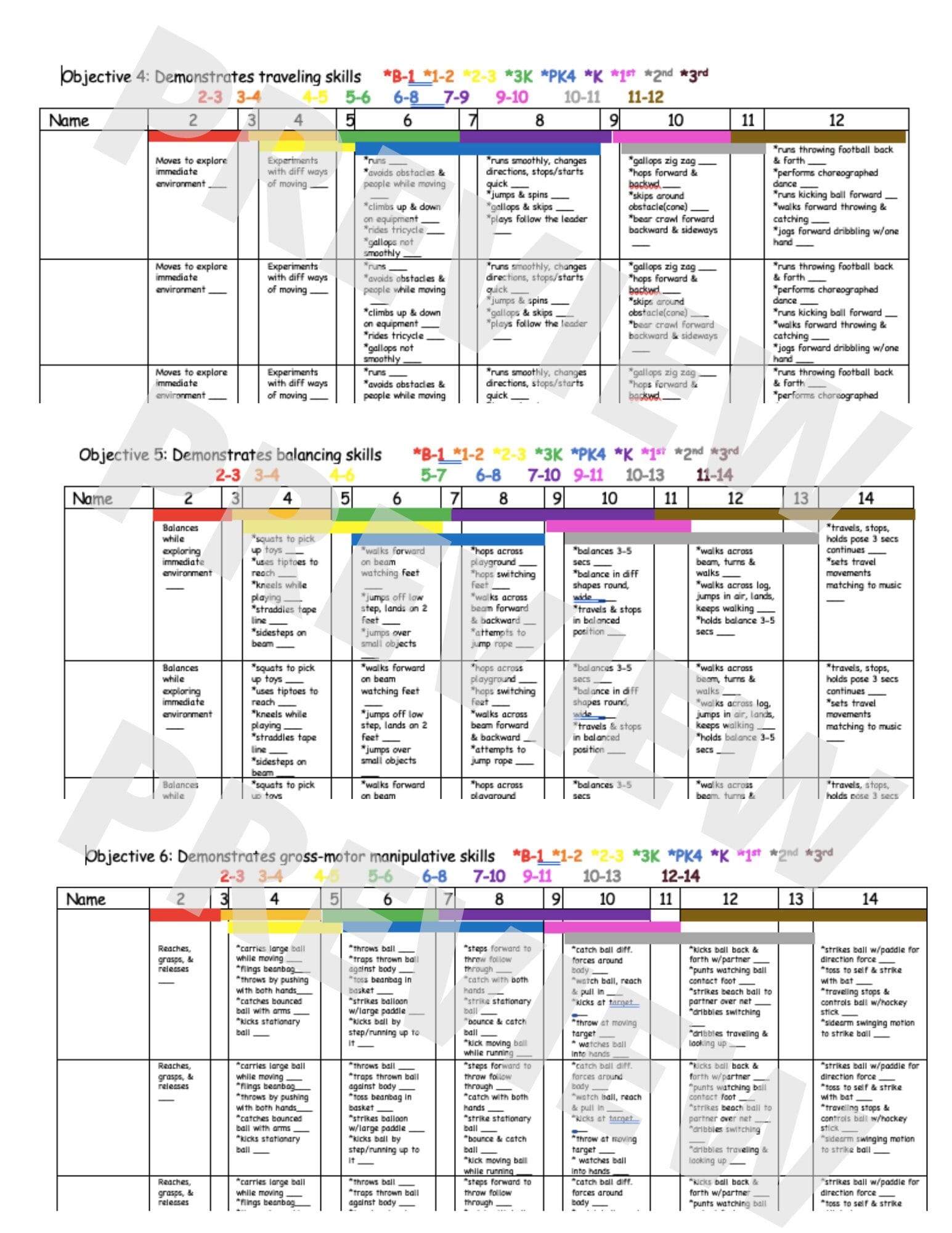

Cheat Sheet Printable Teaching Strategies Gold Objectives Checklist

Teaching Strategies GOLD - Formative Assessment for Early Learning